.NET, Cloud & Software architecture

We’re working on the next chapter of this conference. More details coming soon.

Conference month: September 2026

How will software development evolve in the 2020s? Join Richard Campbell as he explores the landscape of technology that will have a massive impact on software development over the next ten years. What new devices are coming? Will Artificial Intelligence take over everything? How will people connect to the web in the next ten years? And what about Quantum Computing? All these topics and more will shape our future!

Imagine a world in which you could build a distributed system that guarantees that every individual user action travels through a multitude of services without ever being lost or duplicated. No lost orders. No duplicate entries that require manual review. No persistent exceptions due to data inconsistencies.

If you're imagining rainbows and unicorns right now, this session is for you!

Before going into specifics, we need to stop thinking about message delivery and focus on message processing instead. We'll explore an end-to-end approach to message processing, starting from the human-to-machine interaction in the front end of the system and traversing component-to-component message-based communication throughout the system.

As a cherry on top, I'll present you with a fully operable example showcasing cross-system, exactly-once, HTTP-based communication.

Ready to explore what's over the rainbow?

Combining the power of Kubernetes and MLOps brings scalability, reliability, and reproducibility to generative AI workflows. In this session, we will explore how Kubernetes enables the orchestration of distributed generative AI training and inference pipelines, while MLOps practices ensure efficient model development, deployment, and monitoring. Join us to discover how this combination empowers organizations to unlock the full potential of generative AI while achieving seamless scalability and operational excellence.

We know it’s vital that code executed at scale performs well. But how do we know whether our performance optimizations actually make it faster? Fortunately, we have powerful tools to help: BenchmarkDotNet is a .NET library for benchmarking optimizations, with many simple examples to help you get started.

In real systems, however, the code we need to optimize is rarely straightforward. It contains assumptions we need to discover before we even know what to improve. The code is hard to isolate. It has dependencies, which may or may not be relevant to the optimization. And even once we’ve decided what to optimize, it’s hard to reliably benchmark the before and after. Only measurements can tell us whether our changes actually make things faster. Without them, we could even make things slower without realizing it. Understanding how to create benchmarks is only the tip of the iceberg. In this talk, you'll also learn how to:

- Identify areas of improvement with the best effort-to-value ratio

- Isolate code to make its performance measurable without extensive refactoring

- Apply the performance loop of measuring, changing, and validating to ensure performance actually improves and nothing breaks

- Gradually become more “performance aware” without costing an arm and a leg

You've likely heard about OpenTelemetry and are either starting to use it or thinking about using it in your applications, as you should! But how do you use it effectively? How should you set things up? Which spans or activities should you create, and how should you name them?

In this talk we'll cover:

* Codeless instrumentation

* Getting automatic spans from popular libraries

* What application context is important in your observability

* Different setup techniques

* Using OpenTelemetry in messaging systems like Azure Service Bus and Kafka

* How to export your telemetry signals to a free, open-source backend

* How K8s can make observability simpler

This will be a talk about best practices, tips and tricks for getting the most out of OpenTelemetry.

Workshops

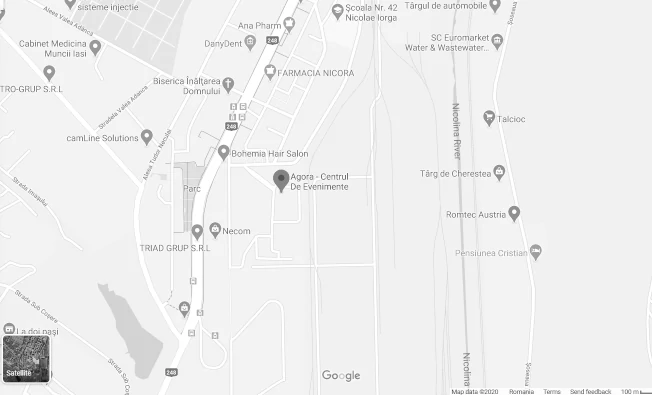

Where to stay in Iași

Hotel Unirea

Email their reception ([email protected]), quote the special code dotnetdays, and get special prices on accommodation.

Hotel International

Visit their website, apply the code dotnetdays, and get special prices.